Overview

User research can deliver helpful insights on all aspects of UX design. But sometimes, we need to take a nuanced approach to better tease out the findings that matter to the copy and content design.

As the discipline of UX writing continues to mature, we can take a closer look at how different methods can be tweaked and re-focused to inform robust, sparkling copy in a non-commercial environment.

Why UX writing research is different

Words are used when information can't be communicated by the visual design

That means a bit of copy is successful if we know the user has understood it before they take the action we'd like them to.

Users scan-read

79% of adult users don't read all the words (and many have only basic reading ability), so we can't assume reasonable comprehension.

Comprehension is often subconscious

When we start making it conscious in a research setting, our results become less accurate.

Attitudes in a research setting don't match real-life behaviour

This follows the pattern found in general UX research. When it comes to copy, users in research settings say they want more explanation, but in real life their behaviour favours a clean, simple journey.

Words are more open to interpretation

Unlike design, which is more universal, words and phrases can mean different things to different people in different regions, so they benefit from more investigation.

All in all, it means we have to think deeper to measure the information we want. And just to make it more of a challenge, we need to extract this intel while leaving the user's conscious layer undisturbed.

Questions to ask

When carrying out copy tests, ask the following questions to understand the insight needed.

- What does the user need to know, and at what stage?

- How much information is too much information?

- How can I tell that a user has understood the copy?

- Is the study artificially making the user conscious of the copy?

- Do the results tell us what the user has understood, or just about what the user does?

- What terminology are audiences using?

- How will the results impact the UX?

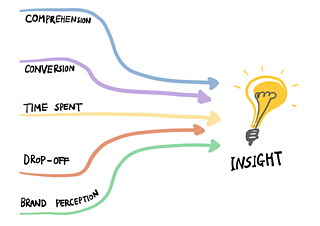

Useful metrics

Comprehension

The key measure of success is whether the user understands the content on the screen.

Conversion

In this context, conversion means that the user has taken the successful action for that screen (for example, tapping on the 'Continue' button). Ideal UX copy lifts conversion without reducing comprehension.

Time spent on page

It's useful to find out the amount of time that a user spends on a page before their action, but the data needs careful interpretation. Depending on the context, a short time on a page could either mean clear copy, or copy that isn't being read. A long time could mean complicated design, complicated copy, or justifiably long copy.

You can get a sense of which it is by looking at extra data – for example, analysing journey success, or checking comprehension.

Drop-off rates

Another metric is to check how many users start a task but don't finish it. You'll need to investigate more to understand the cause of the drop-off, but it could let you know that the copy/visual design/both can be improved.
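As a rough illustration, drop-off can be worked out per step once you know the furthest point each user reached. The sketch below is a minimal example under stated assumptions: the step names, the `drop_off_by_step` helper, and the user data are all made up, and a real analytics export will look different.

```python
from collections import Counter

# Hypothetical journey: step names are illustrative, not from any real product.
STEPS = ["start", "details", "review", "confirm"]


def drop_off_by_step(last_step_reached):
    """Return, per step, the share of users who reached it but went no further.

    `last_step_reached` holds one entry per user: the name of the furthest
    step that user got to.
    """
    furthest = Counter(last_step_reached)
    reached = sum(furthest.values())  # every user reaches the first step
    rates = {}
    for step in STEPS[:-1]:  # the final step counts as completion, not drop-off
        dropped = furthest[step]
        rates[step] = dropped / reached if reached else 0.0
        reached -= dropped  # only the remaining users reach the next step
    return rates


# Made-up data: 10 users, 6 of whom finish the task.
users = ["start"] * 2 + ["details"] * 1 + ["review"] * 1 + ["confirm"] * 6
rates = drop_off_by_step(users)
overall_drop_off = 1 - users.count("confirm") / len(users)

for step, rate in rates.items():
    print(f"{step}: {rate:.0%} dropped off")
print(f"overall: {overall_drop_off:.0%} started but didn't finish")
```

A high rate at one step only tells you where to look. It doesn't say whether the copy, the visual design, or something else is the cause.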

Brand perception

The content and copy must also represent the brand experience. Successful UX writing can be mapped against this too.

Research methods for UX writing

Now comes the meaty bit: choosing a research method based on the information we want to extract. The following are especially useful when it comes to UX writing…

Unmoderated surveys

What:

Qualitative and quantitative research where participants answer questions in their own time, without a researcher overseeing it. Participants are either asked general or hypothetical questions, or shown a stimulus (like a screen or a flow) and asked questions about it.

UX writing insight:

This method is particularly helpful when different copy variants are tested independently of each other – ie you show the participant one variant and interrogate them on it, then compare the results across the variants.

To get the most insight, surveys need a mix of quantitative and open-ended answers. Analysing the conversion and comprehension results in tandem gives a deep understanding that can inform copy improvements, or variants for A/B testing or MVT.

Finally, because variation in copy is often a subtle change, a large dataset is needed to gain meaningful results.

Multivariate testing (MVT) and A/B testing

What:

Quantitative testing where different versions of screens are put live in the short term, and measured against the required metrics. The most successful version is chosen as the long-term solution.

UX writing insight:

- that a copy variant is successful in conversion

- which copy variant is the most successful

Special considerations:

MVT and A/B testing are preferred methods as they show user behaviour in a live situation rather than a replicated situation.

But A/B testing only tells part of the content's story. It's the best method to show conversion rate, but it won't tell you why a user has converted, and in some contexts that matters just as much.

Misleading copy (especially when there's high conversion and low comprehension) is a poor user experience, and can lead to higher drop-off rates further down the customer journey, low engagement, and dissatisfaction with the brand.

To avoid this, variants can be tested for comprehension ahead of an A/B test. Ideally, only variants that have proved successful in comprehension should be put through to an A/B test.
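One way to picture this gate is as a simple filter over comprehension pre-test results. This is only a sketch: the variant names, the numbers, the `variants_for_ab_test` helper, and the 80% pass mark are all assumptions for illustration, not recommended values.

```python
# Hypothetical pre-test results: how many participants answered the
# comprehension question correctly for each copy variant. Numbers are made up.
pretest = {
    "variant_a": {"correct": 41, "total": 50},
    "variant_b": {"correct": 29, "total": 50},
    "variant_c": {"correct": 45, "total": 50},
}

# Assumed pass mark; pick a threshold that suits your own context.
MIN_COMPREHENSION = 0.8


def variants_for_ab_test(results, threshold):
    """Keep only the variants whose comprehension rate meets the threshold."""
    return sorted(
        name
        for name, r in results.items()
        if r["total"] > 0 and r["correct"] / r["total"] >= threshold
    )


shortlist = variants_for_ab_test(pretest, MIN_COMPREHENSION)
print(shortlist)  # ['variant_a', 'variant_c']
```

Here `variant_b` is held back from the A/B test however well it might convert, because too few participants understood it.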

Note that A/B tests work best when the copy is, or directly relates to, a call to action (CTA). This means it's limited in its scope.

Also, because variation in copy is often a subtle change, a large dataset is needed to gain meaningful results.
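To get a feel for what "large" means, here is a minimal sketch using the standard normal-approximation formula for comparing two proportions. The function name, the example conversion rates, and the defaults (5% significance, 80% power) are assumptions for illustration; a statistician or your testing tool may use a different method.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(p_control, p_variant, alpha=0.05, power=0.8):
    """Approximate users needed per variant to detect the given difference.

    Uses the normal approximation for a two-sided test of two proportions.
    """
    if p_control == p_variant:
        raise ValueError("The two rates must differ to estimate a sample size.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)  # desired statistical power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Made-up rates: a subtle copy change (10% to 12%) versus a bigger lift (10% to 15%).
subtle = sample_size_per_variant(0.10, 0.12)
bigger = sample_size_per_variant(0.10, 0.15)
print(f"subtle change: about {subtle} users per variant")
print(f"bigger change: about {bigger} users per variant")
```

With these made-up rates, the subtle change needs a few thousand users per variant, several times more than the bigger lift. That is why small wording tweaks call for plenty of traffic.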

Quick exposure tests

What:

Qualitative or quantitative research that is similar to unmoderated surveys except participants look at a screen for a number of seconds before it disappears. Then they're asked questions about it. This tests their understanding of the screen, and highlights to us which parts are memorable.

UX writing insight:

- comprehension when scan-reading

Special considerations:

This is a preferred method because it mimics the real-life habit of users to scan-read and subconsciously take in information in a short space of time.

The comprehension questions asked afterwards are more likely to be accurate than ones which take place in more artificial scenarios.

Click tests

What:

Quantitative research that gives a user a task and records where on the prototype they click to complete it. This method can be used on one page, or across a whole user journey.

UX writing insight:

- that each step or variant is a successful user experience

- which steps or variants are the best for user experience

Special considerations:

Results are likely to differ from those in a live environment, where the user will think more about their decisions before completing a task. For example, users might merrily follow the flow to sign up to a newsletter, showing a successful click test, but in a live environment they might not sign up because they would then get messages in their inbox. So it primarily tests the user experience (whether the user can perform the action they want), and conversion only secondarily.

Also, as with A/B testing, it will tell you what a user has clicked on, but not their understanding of it. So it should be used in conjunction with either further questions or a test to delve deeper into the user's understanding of the screen/journey.

Competitor analysis

What:

Qualitative (and sometimes quantitative) research that analyses the approaches taken by competing companies so that you can apply them to your own company.

UX writing insight:

- industry terminology

- copy/content solutions

Special considerations:

Competitor analysis is useful to understand the landscape of the content in a particular area. It's especially useful to see if there's an established best practice or whether competitors diverge in their solutions, and if phrases or copy solutions can be displayed in a more elegant manner.

But be warned that live copy/content doesn't always mean that it's been tested, optimised, or is successful, even with the biggest companies.

Also, remember that their audiences may be different (in demographic or familiarity with industry terms, for example), so copy solutions may not apply.

Conversation mining

What:

Qualitative and quantitative research to gather insights from other customer touchpoints, like call centre queries and internet forums.

UX writing insight:

- pain points

- natural language/terminology

Special considerations:

For pain points, err on the side of caution before treating complaints/calls as a widespread issue. Try to use other sources/methods to understand whether it's an issue for just a handful of users, or whether it runs deeper. Copy/UI should not change until this is clear.

Part two

Check out the next article in the series for info on advanced research techniques.

Illustrations by Poorume Yoo.